
Welcome to Phoenix Rising!

Created in 2008, Phoenix Rising is the largest and oldest forum dedicated to furthering the understanding of, and finding treatments for, complex chronic illnesses such as chronic fatigue syndrome (ME/CFS), fibromyalgia, long COVID, postural orthostatic tachycardia syndrome (POTS), mast cell activation syndrome (MCAS), and allied diseases.



Pacing: Very interesting HealthRising article re HR and HRV monitoring and pacing - I may finally spring for an HR/HRV monitor!

- Thread starter Mary


wabi-sabi

Senior Member

- Messages: 1,493
- Location: small town midwest

This stuff should be so diagnostic and treatable, and I mean beyond guesswork on a forum.

Exactly! I'm sure there's gold mixed in with the straw, and I just can't see it. I'm not a data person, but it's all getting missed on a grand scale by people who are.

wabi-sabi

Senior Member

- Messages: 1,493
- Location: small town midwest

That might mean that your sleep is better, but your waking symptoms are just as bad.

I'm guessing that's the case, but sleep quality varies with my other symptoms and definitely with a crash. Poor sleep is a crash or PEM symptom. That's why I needed that little line. Now it's much harder to tell where I am in the crash timeline.

Exactly! I'm sure there's gold mixed in with the straw, and I just can't see it. I'm not a data person, but it's all getting missed on a grand scale by people who are.

I'm very much a data person. I already have a vague mental framework of the lowest hanging fruit - which wouldn't be wearable data, but reactions to supplements and medications.

Since we don't know if we're dealing with one disorder or twenty, it helps that there are AI algorithms that can match up patterns among people and items without knowing anything else about them. So for instance, with just 1,000 people saying whether a drug helped them, did nothing, harmed them, etc., plus a bit of data about each person, you might be able to get an accurate prediction of your likely outcome. Basically, these are the same kinds of algorithms Amazon uses to recommend products.

Part of the issue is gatekeeping with regards to anything medical. They won't help us, but also won't give funding to anyone but their friends at NYU or wherever who will burn through that money like they're Afghan warlords (just with less care toward their subjects). The FDA and CDC don't care about health, but they certainly care about protecting their own fiefdoms.

wabi-sabi

Senior Member

- Messages: 1,493
- Location: small town midwest

with just 1,000 people saying whether a drug helped them, did nothing, harmed them, etc.

I'm worried about clean data on the patient end. How do you screen out placebo/nocebo effects as well as random fluctuations in disease that patients think are due to something they did? Can data science do that?

And on the healthcare end, how do you make sure patterns are recognized correctly? If you let one of the FND people near the diagnosis side, we'd all be doomed.

SnappingTurtle

Senior Member

- Messages: 258
- Location: GA, USA

Interesting. No fee for habit dash? Does it allow any custom info for pattern matching, etc.? I try to track exactly how long I spend on the phone each day, plus some other symptoms like reflux, etc.

Has free tier, else $3/mo or $30/yr.

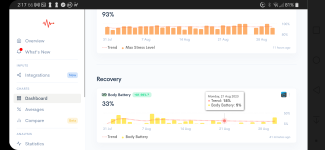

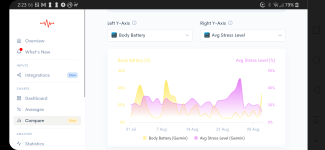

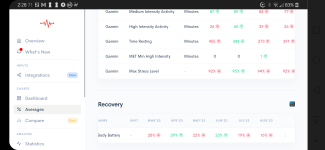

Not sure about custom pattern matching, but there is an API for Premium. Here are a few more screenshots, though.

I'm worried about clean data on the patient end. How do you screen out placebo/nocebo effects as well as random fluctuations in disease that patients think are due to something they did? Can data science do that?

This is pretty easy with enough people and intelligently selected ratings. One thing that I think sucks on Netflix is thumbs down, thumbs up, two thumbs up. It really doesn't match how I think about movies. My ratings are: wasted time, good way to waste time but meh, good movie. I think their algorithm sucks because it just gives me one percentage score, so a movie I will love and a movie I will put on in the background get the same score.

So for this, I would offhand do a rating system:

- item noticeably improved my health
- item improved my health, but only a small change
- neutral - nothing noticed
- item negatively impacted my health, but only a small change
- item noticeably negatively impacted my health

Basically it's just a five-star system, but with a clear explanation of what the stars mean. This is the critical aspect of ML: the algorithm itself isn't even the most important part; what matters most is how you implement that algorithm for your actual goal.
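As a small illustration of turning the labelled answers into model input, the sketch below maps each of the five options to an ordinal score from -2 to +2. The mapping, the `SCALE` dictionary, and the `encode` function are hypothetical names chosen for this example.

```python
# Hypothetical mapping of the five labelled answers to an ordinal score.
SCALE = {
    "item noticeably improved my health": 2,
    "item improved my health, but only a small change": 1,
    "neutral - nothing noticed": 0,
    "item negatively impacted my health, but only a small change": -1,
    "item noticeably negatively impacted my health": -2,
}

def encode(answer: str) -> int:
    """Map one labelled answer to its score; unknown text raises KeyError."""
    return SCALE[answer.strip()]

responses = [
    "item improved my health, but only a small change",
    "neutral - nothing noticed",
    "item noticeably improved my health",
]
scores = [encode(r) for r in responses]
print(scores)  # [1, 0, 2]
```

Storing the labels as integers assumes the steps between answers are roughly equal; if that's doubtful, the answers can instead be kept as ordered categories.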

In other words, even if people have random fluctuations, those get smoothed out as you collect enough data points. It's much more of a problem if you only have 50 people than if you have 5,000 people.
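A quick simulation shows the smoothing effect, assuming each person's report is the treatment's true effect plus independent random noise. The effect size, noise level, and the `estimate_error` function are made up for illustration.

```python
import random
import statistics

def estimate_error(n_people, true_effect=0.5, noise_sd=1.5, trials=100, seed=0):
    """Average absolute error of the group-mean rating vs the true effect.

    Each simulated person reports true_effect plus zero-mean Gaussian noise,
    standing in for random disease fluctuations (all values hypothetical).
    """
    rng = random.Random(seed)
    errors = []
    for _ in range(trials):
        reports = [true_effect + rng.gauss(0, noise_sd) for _ in range(n_people)]
        errors.append(abs(statistics.mean(reports) - true_effect))
    return statistics.mean(errors)

for n in (50, 5000):
    print(n, round(estimate_error(n), 3))
```

The typical error shrinks roughly with the square root of the number of people, so 5,000 people give about ten times less error than 50. One caveat: this only cancels noise that points in random directions. A bias that pushes everyone the same way, such as a placebo effect, does not average out, which is why a comparison group still matters.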

And on the healthcare end, how do you make sure patterns are recognized correctly? If you let one of the FND people near the diagnosis side, we'd all be doomed.

It's easy because the algorithm could say, "67% chance patient will react with small improvement." It's hard because healthcare workers will refuse to use it because 'their expertise' is so fantastic and brilliant that no machine could duplicate it. Now, maybe lose some weight and get some exercise. And you can take off that mask - Covid is over. NEXT!

wabi-sabi

Senior Member

- Messages: 1,493
- Location: small town midwest

So for this, I would offhand do a rating system:

This type of question design is called a Likert scale. There's a good article here if you are interested: https://taso.org.uk/evidence/evalua.../evaluation-guidance-designing-likert-scales/

While it's very important to label your options correctly, it's also essential to know how your respondents will understand and interpret your labels. You can think of the 0-10 pain scale we all hate as a badly designed Likert scale. Patients and healthcare people don't agree on what the numbers mean much of the time, so the ratings become much less useful than they should be.

This type of question design is called a Likert scale. There's a good article here if you are interested: https://taso.org.uk/evidence/evalua.../evaluation-guidance-designing-likert-scales/

Interesting. I think this would generally be called 'feature engineering' in the ML world, although that applies to all designed input data.

I think my rating system would be relatively easy to understand. It's not perfect (what if an item made you worse, then made you better, but you weren't sure if it was temporary, etc.?). It's also difficult because our responses can change over time. But as a first draft, I think it would be a good starting place. There's a balance between excessively granular data that no patient will fill out and excessively broad data that becomes impossible to interpret.

For instance, I can say vitamin C gives me some small improvements. Testosterone noticeably worsened my health. Magnesium gives me some small improvements. LDN seemed neutral for me, which isn't a perfect description: it noticeably made my dreams more vivid, but I didn't notice much beyond that.

So this is far from a perfect tool, but right now when we read on a forum that someone responded well to probiotics or methylene blue or whatever, we have almost no way to guess how we'll respond. My guess is that there would be some patterns. The nice thing about modern ML is that it will also tell you if there's no distinctive pattern - in other words, there are ways to measure whether it can tell you the likelihood of a good or bad response with any precision.
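One simple way to check whether there is any real pattern is a permutation test: shuffle the outcomes many times and see how often chance alone produces a difference as large as the one observed. The sketch assumes hypothetical data pairing a single yes/no trait (labelled `has_pots` here purely for illustration) with whether a treatment helped. Cross-validating a model against a no-information baseline is another common approach.

```python
import random

# Hypothetical data: (has_pots, helped) for 80 people who tried one treatment.
data = [(1, 1)] * 30 + [(1, 0)] * 10 + [(0, 1)] * 12 + [(0, 0)] * 28
# Hypothetical data where the trait makes no difference at all.
no_pattern = [(1, 1)] * 20 + [(1, 0)] * 20 + [(0, 1)] * 20 + [(0, 0)] * 20

def group_gap(pairs):
    """'Helped' rate of the trait=1 group minus that of the trait=0 group."""
    g1 = [h for f, h in pairs if f == 1]
    g0 = [h for f, h in pairs if f == 0]
    return sum(g1) / len(g1) - sum(g0) / len(g0)

def permutation_p_value(pairs, n_perm=2000, seed=0):
    """Share of outcome shuffles giving a gap at least as large as the real one."""
    rng = random.Random(seed)
    observed = abs(group_gap(pairs))
    features = [f for f, _ in pairs]
    outcomes = [h for _, h in pairs]
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(outcomes)  # break any link between trait and outcome
        if abs(group_gap(list(zip(features, outcomes)))) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)

print(round(group_gap(data), 2), permutation_p_value(data))
print(permutation_p_value(no_pattern))  # 1.0
```

A small p-value means the trait carries real information about the response; a value of 1.0, as with the evenly split `no_pattern` data, means the data show no distinctive pattern.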

You can think of the 0-10 pain scale we all hate as a badly designed Likert scale. Patients and healthcare people don't agree on what the numbers mean much of the time, so the ratings become much less useful than they should be.

Yeah, the pain scale is beyond useless. That's why I also hate star ratings or number ratings. That's rarely how people judge things. No one would say, "I give methylene blue a 3.5." But I think most people could answer if it helped them, harmed them, or did nothing noticeable. Even just that would be a huge improvement from completely blind trial and error, where we have absolutely zero chance of guessing since we have zero data.

mariovitali

Senior Member

- Messages: 1,214

@hapl808 Thanks for the mention. Please see the following thread regarding the use of ML in symptom tracking:

wabi-sabi

Senior Member

- Messages: 1,493
- Location: small town midwest

Interesting. I think this would generally be called 'feature engineering' in the ML world, although that applies to all designed input data.

Isn't it though? I'm sort of amazed how differently this computer stuff looks at data compared with the way the humanities or healthcare look at data.

Consider that the Workwell Foundation just published a questionnaire on diagnosing PEM. Reference here: https://content.iospress.com/articles/work/wor220553

Making a questionnaire that works takes so much effort and research, it feels like half the battle, before you even start collecting data with it.


Maybe I'm biased toward ML's approach because that's closer to my background, but unlike healthcare, it has to actually work, and in many areas it's easy to measure. Amazon can tweak their algorithm and see if customers buy more suggested products. Google can tweak their algorithm and see if you spend more time on YouTube or click on more search links or whatever metrics they decide to track (which has its own pitfalls, of course).
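The kind of measurement described here is usually done as an A/B test. Below is a minimal sketch of a two-proportion z-test, one standard way to judge whether a tweaked recommender's click rate really differs from the old one's. The click counts and the function name are invented for illustration.

```python
from math import erfc, sqrt

def two_proportion_z_test(clicks_a, n_a, clicks_b, n_b):
    """Two-sided z-test for a difference between two click (conversion) rates."""
    p_a, p_b = clicks_a / n_a, clicks_b / n_b
    pooled = (clicks_a + clicks_b) / (n_a + n_b)  # shared rate if there's no real difference
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # no variation at all, nothing to test
    z = (p_b - p_a) / se
    # Two-sided p-value: P(|Z| >= |z|) = erfc(|z| / sqrt(2)) for a standard normal Z
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value

# Hypothetical numbers: old recommender (A) vs tweaked recommender (B).
z, p = two_proportion_z_test(clicks_a=500, n_a=10_000, clicks_b=580, n_b=10_000)
print(round(z, 2), round(p, 4))
```

With these made-up numbers, the 5.0% vs 5.8% difference gives z of about 2.5 and a two-sided p-value of about 0.012, so it is unlikely to be chance alone.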

I feel like there are very few people on the Comp Sci Eng path who can talk to human beings, and there are very few people in Arts or Humanities who understand Comp Sci. This creates so much misery.

You end up with AI that can detect a lie with 80% accuracy, which is then used to imprison 20% of innocent people (obviously not that breakdown exactly, but you know what I mean). A terrible use of technology (law enforcement is great at finding the worst use for any tech).
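To see how a detector that is "80% accurate" can still mostly flag innocent people, here is a worked sketch. It assumes "80% accuracy" means both 80% sensitivity and 80% specificity, and that 10% of the people tested are actually lying; both numbers, and the function name, are hypothetical.

```python
def share_innocent_among_flagged(base_rate, sensitivity, specificity):
    """Fraction of people flagged as lying who are actually telling the truth."""
    true_pos = base_rate * sensitivity               # liars correctly flagged
    false_pos = (1 - base_rate) * (1 - specificity)  # truth-tellers wrongly flagged
    return false_pos / (true_pos + false_pos)

# Assumed: 10% of those tested are lying; detector is 80% sensitive and 80% specific.
print(round(share_innocent_among_flagged(0.10, 0.80, 0.80), 3))  # 0.692
```

Under those assumptions, 20% of innocent people get flagged (1 - specificity), and because innocent people are the large majority, about 69% of everyone flagged is innocent.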

At the same time, you have AI that can already outperform any non-cardiologist at reading an EKG, and probably 95% of cardiologists too (I think it's even better now), but they will be slow to implement it. That makes no sense to me, because it doesn't have to be a definitive tool. Instead of teaching cardiologists to use it without getting lazy and to keep using their own judgment, they're sticking with outdated tech that couldn't outperform a resident, because that's what's approved.

AI studies have shown models can accurately predict Covid infections from a forced cough into a smartphone. This has been replicated numerous times over the past three years, yet we have no access to it and still thousands are dying with no effort whatsoever at infection control (other than washing hands obsessively). We have to buy RATs or pay for PCR swabs when we should have our iPhone tell us if we're infected.

Tech - move fast and break things. Medicine - move slow and keep breaking things, but deny they're broken. Neither approach is wonderful, but both are great fields for ego run amok.

wabi-sabi

Senior Member

- Messages: 1,493
- Location: small town midwest

AI studies have shown models can accurately predict Covid infections from a forced cough into a smartphone.

Do they look at sensitivity and specificity?


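Yep, they generally look at that. Some studies use ML terminology instead: recall, which is the same thing as sensitivity, and precision, which is positive predictive value rather than specificity. Others report F1 scores, which combine precision and recall.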
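For reference, all of these metrics come from the same four confusion-matrix counts. The sketch below uses hypothetical counts for 1,000 screened people at 10% prevalence; the function name is illustrative.

```python
def classification_metrics(tp, fp, tn, fn):
    """Standard screening metrics from confusion-matrix counts."""
    sensitivity = tp / (tp + fn)   # also called recall
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)     # positive predictive value, not specificity
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {"sensitivity": sensitivity, "specificity": specificity,
            "precision": precision, "f1": f1}

# Hypothetical counts: 100 infected (90 caught), 900 uninfected (45 false alarms).
m = classification_metrics(tp=90, fp=45, tn=855, fn=10)
print({k: round(v, 3) for k, v in m.items()})
```

With these counts, sensitivity is 0.90 and specificity 0.95, yet precision is only about 0.67, because at 10% prevalence the 45 false positives are a sizable share of all positive results. That is why precision and specificity shouldn't be treated as interchangeable.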

Here's the MIT study from 2020. Sensitivity of 98.5%, specificity of 94.2% for symptomatic.

From Qatar in 2022, sensitivity of 96%, specificity of 95% for symptomatic.

There are several more examples, with the lowest results in the 80s for asymptomatic cases, but many in the high 90s even for asymptomatic cases.

I've thought about trying to make this myself as they are often getting good results even with small datasets. I'm not sure why zero of these have made it to market anywhere in the world, unless they are all lying about their results? Even if the USA has regulatory barriers, I'm surprised we haven't seen it in India or elsewhere. Although I guess most countries at this point want to pretend Covid is over.

Just the USA alone has spent literally billions on terrible PCR and RAT tests, some with a sensitivity of 0% for asymptomatic cases! There was a company called Curative where their FDA application was 'validated' on like 25 patients and they had 0% sensitivity on asymptomatic cases - and I believe they grossed $1b during the pandemic on testing. The FDA said it wasn't supposed to be used on asymptomatic cases, but of course every city ignored that.

@hapl808 Thanks for the mention. Please see the following thread regarding the use of ML in symptom tracking:

Very interesting - I take it that Kalafatis is you?

Do you still have the mobile app for entering that data? How did you deal with symptoms, since they can vary in frequency, duration (intermittent vs. continuous), severity, etc.?

It obviously helps to have an enormous amount of data if you're trying to figure out whether the weather or your cholesterol intake or talking on the phone affects you. Did you also examine delayed effects, like if cholesterol intake wouldn't affect you at all on the day you ingested it, but would make you feel better three days later or whatever?

Looks very promising for more individualized data.

mariovitali

Senior Member

- Messages: 1,214

@hapl808 Yes, correct.

The mobile app was just an app I found to be useful at the time which is called MyLogs Pro. I believe it still exists.

I did look for a delayed effect on the next day but not many days after. Of importance was also quality of sleep. I used binary features as much as I could because otherwise I would have needed more data.

I used Sequence Data Mining techniques to see how the sequence of events may have played a role, and I found that tinnitus was an important feature.
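The exact method isn't described here, so the following is only a hypothetical illustration of the core idea behind sequential pattern mining: counting how often one logged event is followed by another within a short window (the "support" of an ordered pair). The event names, logs, and `pair_support` function are invented; established algorithms such as PrefixSpan or SPADE extend this to longer sequences.

```python
from collections import Counter
from itertools import product

# Hypothetical daily logs: each day is the set of binary events recorded that day.
days = [
    {"tinnitus", "poor_sleep"},
    {"crash"},
    {"good_sleep"},
    {"tinnitus"},
    {"crash", "poor_sleep"},
    {"good_sleep"},
    {"tinnitus"},
    {"good_sleep"},
]

def pair_support(days, window=1):
    """Count days on which event A occurs and event B occurs within the next `window` days."""
    counts = Counter()
    for i, today in enumerate(days):
        upcoming = set().union(*days[i + 1 : i + 1 + window])  # events in the window ahead
        for a, b in product(today, upcoming):
            counts[(a, b)] += 1
    return counts

support = pair_support(days)
print(support[("tinnitus", "crash")], support[("good_sleep", "crash")])  # 2 0
```

Pairs with high support relative to how often each event occurs on its own are the candidates worth a closer look.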


I'd be very interested in discussing further if you don't mind. I've found it very difficult to find consistent patterns, especially since I think some supplements may only work when combined, etc.
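To test the idea that some supplements only work in combination, one simple approach is to compare average outcomes across all four take/don't-take combinations and compute an interaction term. The log, the supplement labels `took_a`/`took_b`, and the function name are made up purely to show the arithmetic.

```python
from statistics import mean

# Hypothetical log rows: (took_a, took_b, symptom_score), higher score = better day.
rows = [
    (0, 0, 3), (1, 0, 3), (0, 1, 4), (1, 1, 7),
    (0, 0, 4), (1, 0, 4), (0, 1, 3), (1, 1, 6),
]

def mean_by_combo(rows):
    """Average score for each of the four (took_a, took_b) combinations."""
    groups = {}
    for a, b, score in rows:
        groups.setdefault((a, b), []).append(score)
    return {combo: mean(scores) for combo, scores in sorted(groups.items())}

means = mean_by_combo(rows)
# Interaction: how much 'both' beats what the two single effects add up to.
interaction = (means[(1, 1)] - means[(1, 0)]) - (means[(0, 1)] - means[(0, 0)])
print(means, interaction)
```

An interaction of 3.0 means taking both together did 3 points better than the two individual effects would predict; in a model, the same idea is usually added as a product feature such as `took_a * took_b`.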

I'm not terribly experienced at ML, so I'm not sure if I could collect the appropriate data and do the analysis. Maybe if I were in better health and could work on it more, but ironically, the thing I'm trying to solve is exactly what limits me to maybe 30 minutes of good computer use per day.

My thought would be to add some features that would be like "phone use one day prior" and "phone use two days prior" and things like that, rather than try to change the whole architecture to look for multi-day patterns. Some things seem to work immediately (Vit C) and some seem to have delayed crashes (cognitive exertion).
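Lagged features like "phone use one day prior" are straightforward to build. This sketch assumes a hypothetical daily log stored as plain Python lists; each lag column shifts the series by that many days and leaves the first days empty.

```python
# Hypothetical daily log: minutes of phone use and a 0-10 'how I feel' score.
phone_minutes = [30, 120, 20, 15, 90, 10, 25]
feel_score = [6, 6, 3, 5, 6, 2, 5]

def add_lags(series, lags=(1, 2)):
    """Return one list per lag; day t gets series[t - lag], or None if out of range."""
    return {
        f"lag_{lag}": [series[t - lag] if t - lag >= 0 else None for t in range(len(series))]
        for lag in lags
    }

features = add_lags(phone_minutes)
for t in range(len(feel_score)):
    # day, phone use today, 1 day prior, 2 days prior, how I felt
    print(t, phone_minutes[t], features["lag_1"][t], features["lag_2"][t], feel_score[t])
```

In pandas, `Series.shift(1)` produces the same kind of one-day lag. Rows with missing lag values are usually dropped before fitting a model.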

Would be interested in knowing how you approached the analysis. Thinking out loud, I'm less familiar with sequence data mining, so my first thought would probably be something like random forests or neural nets, but the delayed effects would require engineering a lot of extra features, etc. My very vague understanding is that sequence data mining might be easier for finding patterns.

Anyways, curious where you're at with this stuff as you have a lot more experience. Now that you've done it, how would you approach it if you were doing it again?

Judee

Psalm 46:1-3

- Messages: 4,503
- Location: Great Lakes

There's a way to give feedback?! I need to do this.

Just within the app store.